Blog Archive for / 2008 / 11 /

Memory Models and Synchronization

Monday, 24 November 2008

I have read a couple of posts on memory models over the past couple of weeks: one from Jeremy Manson on What Volatile Means in Java, and one from Bartosz Milewski entitled Who ordered sequential consistency?. Both of these cover a sequentially consistent memory model: in Jeremy's case because sequential consistency is required by the Java Memory Model for volatile variables, and in Bartosz's case because he's explaining what it means to be sequentially consistent, and why we would want that.

In a sequentially consistent memory model, there is a single total order of all atomic operations which is the same across all processors in the system. You might not know what the order is in advance, and it may change from execution to execution, but there is always a total order.

This is the default for the new C++0x atomics, and required for Java's volatile, for good reason: it is considerably easier to reason about the behaviour of code that uses sequentially consistent orderings than code that uses a more relaxed ordering.
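To make the single total order concrete, here is a minimal sketch of the classic "store buffering" test, written against the final C++11 header `<atomic>`. The helper name `count_both_zero` and the iteration count are my own inventions for illustration. With the default sequentially consistent ordering one of the two stores must come first in the total order, so the two loads can never both return 0.

```cpp
#include <atomic>
#include <thread>

// Hypothetical helper (not from any library): runs the test `iterations`
// times and returns how often both threads read 0.
int count_both_zero(int iterations)
{
    int both_zero = 0;
    for (int i = 0; i < iterations; ++i)
    {
        std::atomic<int> x(0), y(0);
        int r1 = -1, r2 = -1;

        // No ordering argument means std::memory_order_seq_cst.
        std::thread t1([&] { x.store(1); r1 = y.load(); });
        std::thread t2([&] { y.store(1); r2 = x.load(); });
        t1.join();
        t2.join();

        // Whichever store is first in the total order is visible to the
        // other thread's load, so r1 == 0 && r2 == 0 cannot happen.
        if (r1 == 0 && r2 == 0)
            ++both_zero;
    }
    return both_zero;
}
```

Calling `count_both_zero(2000)` from `main` should return 0 on a conforming implementation. If both pairs of operations were switched to `memory_order_relaxed`, a non-zero count would be permitted.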

The thing is, C++0x atomics are only sequentially consistent by default — they also support more relaxed orderings.

Relaxed Atomics and Inconsistent Orderings

I briefly touched on the properties of relaxed atomic operations in my presentation on The Future of Concurrency in C++ at ACCU 2008 (see the slides). The key point is that relaxed operations are unordered. Consider this simple example with two threads:

    #include <thread>
    #include <atomic>   // named <cstdatomic> in the C++0x drafts of the time
    #include <cassert>

    std::atomic<int> x(0),y(0);

    void thread1()
    {
        x.store(1,std::memory_order_relaxed);
        y.store(1,std::memory_order_relaxed);
    }

    void thread2()
    {
        int a=y.load(std::memory_order_relaxed);
        int b=x.load(std::memory_order_relaxed);
        if(a==1)
            assert(b==1);   // may fire: relaxed operations are unordered
    }

    int main()
    {
        std::thread t1(thread1);
        std::thread t2(thread2);
        t1.join();
        t2.join();
    }

All the atomic operations here are using memory_order_relaxed, so there is no enforced ordering. Therefore, even though thread1 stores x before y, there is no guarantee that the writes will reach thread2 in that order: even if a==1 (implying thread2 has seen the result of the store to y), there is no guarantee that b==1, and the assert may fire.

If we add more variables and more threads, then each thread may see a different order for the writes. Some of the results can be even more surprising than that, even with two threads. The C++0x working paper features the following example:

    void thread1()
    {
        int r1=y.load(std::memory_order_relaxed);
        x.store(r1,std::memory_order_relaxed);
    }

    void thread2()
    {
        int r2=x.load(std::memory_order_relaxed);
        y.store(42,std::memory_order_relaxed);
        assert(r2==42);
    }

There's no ordering between threads, so thread1 might see the store to y from thread2, and thus store the value 42 in x. The fun part comes because the load from x in thread2 can be reordered after everything else (even the store that occurs after it in the same thread) and thus load the value 42! Of course, there's no guarantee about this, so the assert may or may not fire: we just don't know.

Acquire and Release Ordering

Now you've seen quite how scary life can be with relaxed operations, it's time to look at acquire and release ordering. This provides pairwise synchronization between threads — the thread doing a load sees all the changes made before the corresponding store in another thread. Most of the time, this is actually all you need — you still get the "two cones" effect described in Jeremy's blog post.
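As a rough illustration of that pairwise synchronization, here is the first relaxed example again with the store to y made a release and the load from y made an acquire. This is only a sketch: the helper name `count_stale_reads` and the loop around the test are assumptions of mine, not anything from the working paper. If the acquire load reads the value written by the release store, the earlier store to x is guaranteed to be visible too.

```cpp
#include <atomic>
#include <thread>

// Hypothetical helper: returns the number of runs in which the reader saw
// y == 1 but did not see the store to x that preceded it.
int count_stale_reads(int iterations)
{
    int stale = 0;
    for (int i = 0; i < iterations; ++i)
    {
        std::atomic<int> x(0), y(0);
        int a = 0, b = 0;

        std::thread writer([&] {
            x.store(1, std::memory_order_relaxed);
            y.store(1, std::memory_order_release);   // publishes the store to x
        });
        std::thread reader([&] {
            a = y.load(std::memory_order_acquire);   // pairs with the release
            b = x.load(std::memory_order_relaxed);
        });
        writer.join();
        reader.join();

        // a == 1 means the acquire read the released value, so b must be 1.
        if (a == 1 && b != 1)
            ++stale;
    }
    return stale;
}
```

`count_stale_reads(2000)` should return 0. Note that only the operations on y need the stronger ordering; the accesses to x can stay relaxed because they are ordered by the release-acquire pair.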

With acquire-release ordering, independent reads of variables written independently can still give different orders in different threads, so if you do that sort of thing then you still need to think carefully. e.g.

    std::atomic<int> x(0),y(0);

    void thread1()
    {
        x.store(1,std::memory_order_release);
    }

    void thread2()
    {
        y.store(1,std::memory_order_release);
    }

    void thread3()
    {
        int a=x.load(std::memory_order_acquire);
        int b=y.load(std::memory_order_acquire);
    }

    void thread4()
    {
        // reads y before x, the opposite order to thread3
        int d=y.load(std::memory_order_acquire);
        int c=x.load(std::memory_order_acquire);
    }

The two reader threads, thread3 and thread4, load the same two variables but in opposite orders. In this example, the stores are on separate threads, so there is no ordering between them. Consequently the reader threads may see the writes in either order: in thread3 you might get a==1 and b==0 or vice versa, or both 1 or both 0. The fun part is that the two reader threads might see opposite orders, so you have a==1 and b==0 (thread3 sees the store to x but not the store to y), but d==1 and c==0 (thread4 sees the store to y but not the store to x)! With sequentially consistent code, both threads must see consistent orderings, so this would be disallowed.
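For comparison, here is a sketch of the same four-thread test using the default sequentially consistent ordering, assuming one reader loads x then y and the other loads y then x. The helper name `count_opposite_orders` is hypothetical. Under sequential consistency the two stores have one position each in the total order, so the readers cannot disagree about which came first.

```cpp
#include <atomic>
#include <thread>

// Hypothetical helper: returns how many runs ended with the two readers
// observing the two stores in opposite orders.
int count_opposite_orders(int iterations)
{
    int opposite = 0;
    for (int i = 0; i < iterations; ++i)
    {
        std::atomic<int> x(0), y(0);
        int a = 0, b = 0, c = 0, d = 0;

        // All operations use the default std::memory_order_seq_cst.
        std::thread w1([&] { x.store(1); });
        std::thread w2([&] { y.store(1); });
        std::thread r1([&] { a = x.load(); b = y.load(); });   // x, then y
        std::thread r2([&] { d = y.load(); c = x.load(); });   // y, then x
        w1.join();
        w2.join();
        r1.join();
        r2.join();

        // r1 saw x but not y, while r2 saw y but not x: a contradiction
        // if there is a single total order of the two stores.
        if (a == 1 && b == 0 && d == 1 && c == 0)
            ++opposite;
    }
    return opposite;
}
```

`count_opposite_orders(1000)` should return 0. With acquire loads and release stores the same outcome would be permitted by the memory model, although many common processors will never actually produce it.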

Summary

The details of relaxed memory models can be confusing, even for experts. If you're writing code that uses bare atomics, stick to sequential consistency until you can demonstrate that this is causing an undesirable impact on performance.

There's a lot more to the C++0x memory model and atomic operations than I can cover in a blog post — I go into much more depth in the chapter on atomics in my book.

Posted by Anthony Williams

[/ threading /] permanent link


First Review of C++ Concurrency in Action

Monday, 24 November 2008

Dean Michael Berris has just published the first review of C++ Concurrency in Action that I've seen over on his blog. Thanks for your kind words, Dean!

C++ Concurrency in Action is not yet finished, but you can buy a copy now under the Manning Early Access Program and you'll get a PDF with the current chapters (plus updates as I write new chapters) and either a PDF or hard copy of the book (your choice) when it's finished.

Posted by Anthony Williams

[/ news /] permanent link

Tags: review, C++, cplusplus, concurrency, book


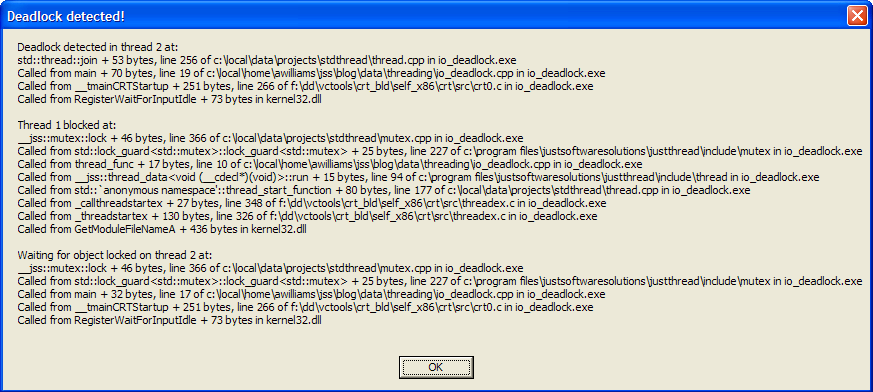

Deadlock Detection with just::thread

Wednesday, 12 November 2008

One of the biggest problems with multithreaded programming is the possibility of deadlocks. In the excerpt from my book published over at codeguru.com (Deadlock: The problem and a solution) I discuss various ways of dealing with deadlock, such as using std::lock when acquiring multiple locks at once and acquiring locks in a fixed order.
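As a reminder of what the std::lock approach looks like, here is a minimal sketch. The `account` type and the `transfer` and `run_transfers` functions are made up for this illustration and are not taken from the excerpt. std::lock acquires both mutexes without deadlocking, even when two threads name them in opposite orders.

```cpp
#include <mutex>
#include <thread>

// Hypothetical type for illustration only.
struct account
{
    std::mutex m;
    int balance;
    explicit account(int initial) : balance(initial) {}
};

// Assumes `from` and `to` are different accounts.
void transfer(account& from, account& to, int amount)
{
    // Lock both mutexes together, then hand ownership to the guards.
    std::lock(from.m, to.m);
    std::lock_guard<std::mutex> lk1(from.m, std::adopt_lock);
    std::lock_guard<std::mutex> lk2(to.m, std::adopt_lock);
    from.balance -= amount;
    to.balance += amount;
}

// Runs opposing transfers on two threads and returns the combined balance.
int run_transfers(int count)
{
    account a(1000), b(1000);
    std::thread t1([&] { for (int i = 0; i < count; ++i) transfer(a, b, 1); });
    std::thread t2([&] { for (int i = 0; i < count; ++i) transfer(b, a, 1); });
    t1.join();
    t2.join();
    return a.balance + b.balance;
}
```

If `transfer` instead locked `from.m` and then `to.m` with two separate lock_guard objects, the two threads in `run_transfers` could each take one mutex and wait forever for the other.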

Following such guidelines requires discipline, especially on large code bases, and occasionally we all slip up. This is where the deadlock detection mode of the just::thread library comes in: if you compile your code with deadlock detection enabled then if a deadlock occurs the library will display a stack trace of the deadlocked threads and the locations at which the synchronization objects involved in the deadlock were locked.

Let's look at the following simple code for an example.

    #include <thread>
    #include <mutex>
    #include <iostream>

    std::mutex io_mutex;

    void thread_func()
    {
        std::lock_guard<std::mutex> lk(io_mutex);
        std::cout<<"Hello from thread_func"<<std::endl;
    }

    int main()
    {
        std::thread t(thread_func);
        std::lock_guard<std::mutex> lk(io_mutex);
        std::cout<<"Hello from main thread"<<std::endl;
        t.join();
        return 0;
    }

Now, it is obvious just from looking at the code that there's a potential deadlock here: the main thread holds the lock on io_mutex across the call to t.join(). Therefore, if the main thread manages to lock the io_mutex before the new thread does then the program will deadlock: the main thread is waiting for thread_func to complete, but thread_func is blocked on the io_mutex, which is held by the main thread!

Compile the code and run it a few times: eventually you should hit the deadlock. In this case, the program will output "Hello from main thread" and then hang. The only way out is to kill the program.

Now compile the program again, but this time with _JUST_THREAD_DEADLOCK_CHECK defined: you can either define this in your project settings, or define it in the first line of the program with #define. It must be defined before any of the thread library headers are included in order to take effect. This time the program doesn't hang; instead it displays a message box with the title "Deadlock Detected!" looking similar to the following:

Of course, you need to have debug symbols for your executable to get meaningful stack traces.

Anyway, this message box shows three stack traces. The first is labelled "Deadlock detected in thread 2 at:", and tells us that the deadlock was found in the call to std::thread::join from main, on line 19 of our source file (io_deadlock.cpp). Now, it's important to note that "line 19" is actually where execution will resume when join returns rather than the call site, so in this case the call to join is on line 18. If the next statement was also on line 18, the stack would report line 18 here.

The next stack trace is labelled "Thread 1 blocked at:", and tells us where the thread we're trying to join with is blocked. In this case, it's blocked in the call to mutex::lock from the std::lock_guard constructor called from thread_func returning to line 10 of our source file (the constructor is on line 9).

The final stack trace completes the circle by telling us where that mutex was locked. In this case the label says "Waiting for object locked on thread 2 at:", and the stack trace tells us it was the std::lock_guard constructor in main returning to line 17 of our source file.

This is all the information we need to see the deadlock in this case, but in more complex cases we might need to go further up the call stack, particularly if the deadlock occurs in a function called from lots of different threads, or the mutex being used in the function depends on its parameters.

The just::thread deadlock detection can help there too: if you're running the application from within the IDE, or you've got a Just-in-Time debugger installed then the application will now break into the debugger. You can then use the full capabilities of your debugger to examine the state of the application when the deadlock occurred.
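Once the traces have pointed at the problem, the repair for this particular example is small. The sketch below is one possible fix, not the only one: it puts the main thread's lock in its own scope so that io_mutex is released before the join. The function name `run_without_deadlock` is hypothetical and simply stands in for the body of main.

```cpp
#include <iostream>
#include <mutex>
#include <thread>

std::mutex io_mutex;

void thread_func()
{
    std::lock_guard<std::mutex> lk(io_mutex);
    std::cout << "Hello from thread_func" << std::endl;
}

// Hypothetical replacement for the body of main() in the example.
int run_without_deadlock()
{
    std::thread t(thread_func);
    {
        // The extra scope releases io_mutex before the join below.
        std::lock_guard<std::mutex> lk(io_mutex);
        std::cout << "Hello from main thread" << std::endl;
    }
    t.join();   // thread_func can now acquire io_mutex and finish
    return 0;
}
```

The two messages can still appear in either order, but neither thread waits for the other while holding the mutex, so the program always terminates.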

Try It Out

You can download a sample Visual C++ Express 2008 project for this example, which you can use with our just::thread implementation of the new C++0x thread library. The code should also work with g++.

just::thread doesn't just work with Microsoft Visual Studio 2008: it's also available for g++ 4.3 on Ubuntu Linux. Get your copy today and try out the deadlock detection feature risk free with our 30-day money-back guarantee.

Posted by Anthony Williams

[/ threading /] permanent link

Tags: multithreading, deadlock, c++


Detect Deadlocks with just::thread C++0x Thread Library Beta V0.2

Saturday, 01 November 2008

I am pleased to announce that the second beta of just::thread, our C++0x Thread Library, is available, and now features deadlock detection for uses of std::mutex. You can sign up at the just::thread Support forum to download the beta or send an email to beta@stdthread.co.uk.

The just::thread library is a complete implementation of the new C++0x thread library as per the current C++0x working paper. Features include:

- std::thread for launching threads.
- Mutexes and condition variables.
- std::promise, std::packaged_task, std::unique_future and std::shared_future for transferring data between threads.
- Support for the new std::chrono time interface for sleeping and timeouts on locks and waits.
- Atomic operations with std::atomic.
- Support for std::exception_ptr for transferring exceptions between threads.
- New in beta 0.2: support for detecting deadlocks with std::mutex
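To give a flavour of the data-transfer facilities in that list, here is a minimal sketch of passing a value or an exception between threads. It is written against the names in the published C++11 standard, where std::unique_future was renamed std::future, so it is not specific to this beta. The `compute` and `run_compute` functions are hypothetical.

```cpp
#include <future>
#include <stdexcept>
#include <thread>
#include <utility>

// Hypothetical worker: delivers a value or an exception through the promise.
void compute(std::promise<int> result, int input)
{
    try
    {
        if (input < 0)
            throw std::invalid_argument("negative input");
        result.set_value(input * 2);
    }
    catch (...)
    {
        // std::current_exception() yields a std::exception_ptr.
        result.set_exception(std::current_exception());
    }
}

// Returns the worker's result, or -1 if it reported an exception.
int run_compute(int input)
{
    std::promise<int> p;
    std::future<int> f = p.get_future();
    std::thread t(compute, std::move(p), input);
    int value;
    try
    {
        value = f.get();   // blocks until the worker sets a value or exception
    }
    catch (const std::invalid_argument&)
    {
        value = -1;        // the worker's exception was rethrown here
    }
    t.join();
    return value;
}
```

Here `run_compute(21)` returns 42, while `run_compute(-1)` returns -1 because the exception thrown on the worker thread is rethrown by `get()` on the calling thread.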

The library works with Microsoft Visual Studio 2008 or Microsoft Visual C++ 2008 Express for 32-bit Windows. Don't wait for a full C++0x compiler: start using the C++0x thread library today.

Sign up at the just::thread Support forum to download the beta.

Posted by Anthony Williams

[/ news /] permanent link

Tags: multithreading, concurrency, C++0x


Design and Content Copyright © 2005-2025 Just Software Solutions Ltd. All rights reserved. | Privacy Policy